Tool calling is the missing piece that lets AI move from conversation to execution by safely connecting models to real systems.

If you know what tool calling means, congratulations. You’re probably either a developer or someone deeply interested in AI. If you don’t know, no need to feel FOMO. Welcome to the strange new world of humanity trying to fit machines into its daily work.

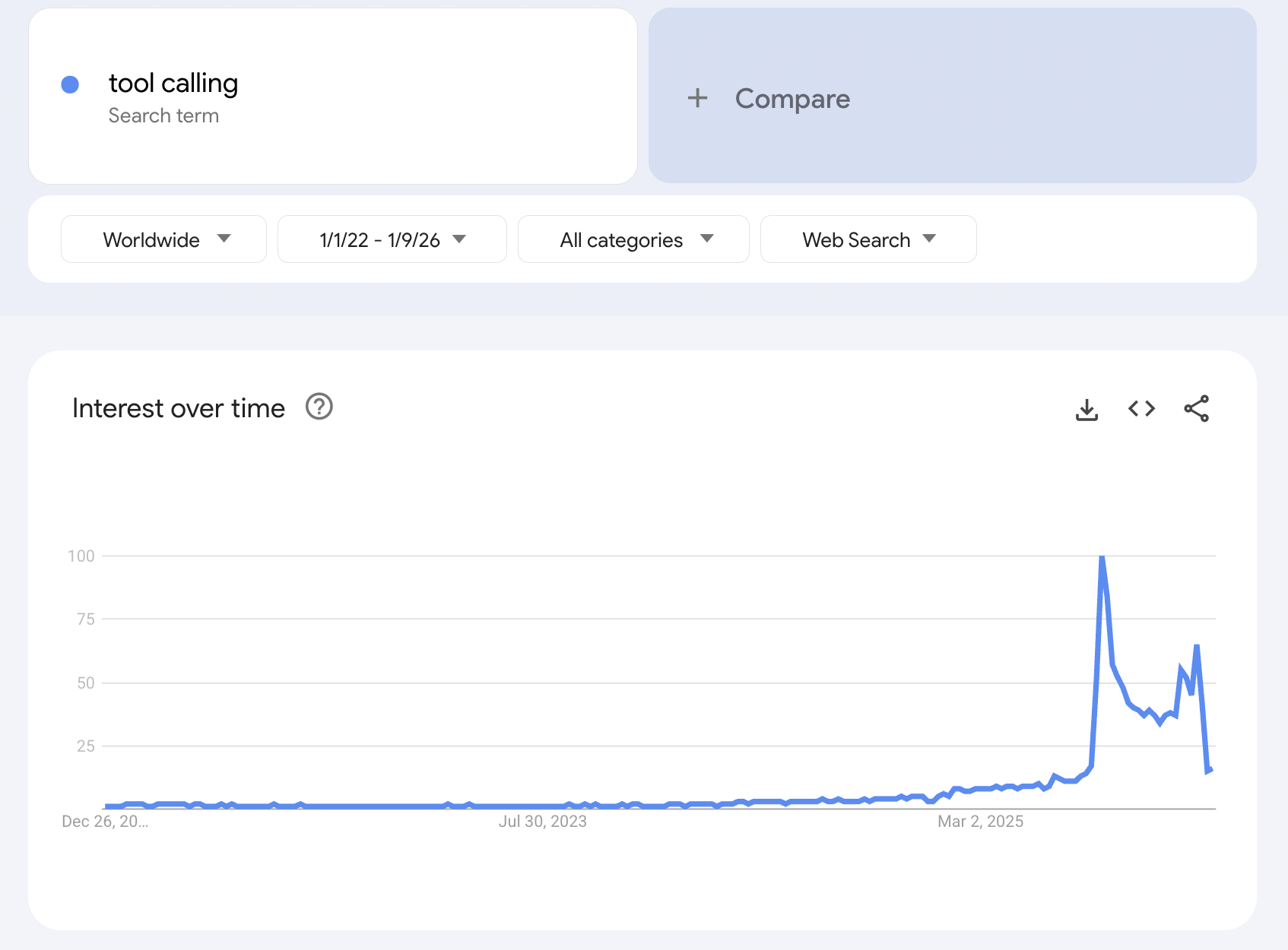

You’re only about half a year late. Searches for the term “tool calling” spiked sharply in August 2025.

One likely trigger was the MIT NANDA GenAI Divide report, which found that 95% of enterprise generative AI pilots were failing to deliver measurable ROI. The problem wasn’t model intelligence; it was integration. Companies realized their AI couldn’t do anything because it couldn’t reliably connect to real systems. Tool calling emerged as the thing everyone sensed was missing, but few could clearly explain.

So, what is tool calling, actually?

For its first couple of years, AI could sweet-talk you well enough that you didn’t mind copy-pasting into chat windows. But unless you did the manual work of stitching everything together, AI couldn’t really act. You could ask it what to present in a meeting or how to phrase an email. But ask it to book a meeting, update a spreadsheet, or pull data from three different systems, and it would politely decline. Or it would ask for access and then fail.

Tool calling is what changes that. At its core, tool calling is the mechanism that lets AI use external software (tools) to retrieve data or take actions. The AI decides when a task requires a tool, which tool is relevant, and what information that tool needs to do its job. It then receives the result and continues reasoning from there.

Behind the scenes, each tool is described to the AI in a structured way: a name, a short description, and the inputs it accepts, most commonly expressed as a JSON Schema. Instead of generating prose, the AI produces a structured instruction, often called a “function call” or “tool call”, that names the tool and supplies the required inputs. The AI does not run anything itself. Your system executes that action, returns the result, and the AI decides what to do next. This loop can repeat multiple times within a single task.
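To make that loop concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `get_weather` tool, its schema, and `fake_model`, which stands in for a real model API by requesting one tool call and then answering in prose. Real provider APIs use their own message shapes, so treat this as the general pattern rather than any vendor’s interface.

```python
import json

# Hypothetical tool description in JSON Schema style (names are illustrative).
GET_WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def get_weather(city: str) -> dict:
    # Stand-in for a real API call.
    return {"city": city, "temp_c": 21}


# Registry mapping tool names to the code your system actually runs.
TOOLS = {"get_weather": get_weather}


def fake_model(messages: list) -> dict:
    """Stand-in for a model: asks for a tool once, then answers in prose."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {
            "type": "function_call",
            "name": "get_weather",
            "arguments": json.dumps({"city": "Lisbon"}),
        }
    data = json.loads(tool_results[-1]["content"])
    return {"type": "text", "content": f"It is {data['temp_c']} C in {data['city']}."}


def run(user_prompt: str, max_steps: int = 5) -> str:
    """Decide, act, observe, continue, with a hard cap on the number of steps."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = fake_model(messages)
        if reply["type"] == "text":
            return reply["content"]
        # The model only *requested* the call; our code executes it.
        messages.append({"role": "assistant", "function_call": reply})
        func = TOOLS[reply["name"]]
        result = func(**json.loads(reply["arguments"]))
        messages.append(
            {"role": "tool", "name": reply["name"], "content": json.dumps(result)}
        )
    raise RuntimeError("step limit reached without a final answer")
```

Calling `run("What is the weather in Lisbon?")` walks through one tool call and then returns the model’s final text. The `max_steps` cap is a design choice: it keeps a confused model from looping forever.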

Earlier AI was like an external consultant who sent you detailed instructions and left you to implement them. AI with tool calling is closer to a colleague who can actually log into systems and get the work done. Not everywhere, and not with unlimited access, but with the specific tools needed for the task at hand. Through a very human loop of “decide, act, observe, continue”, AI moves from impressive conversation to real execution.

Sounds too good to be true?

In demos, tool calling looks magical. The AI fetches calendars, sends emails, updates records, all in seconds. In production, frustration quickly follows.

Real work is multi-step. Most tasks involve sequences: authenticate, fetch data, validate inputs, update records, confirm success. Scheduling a meeting alone means checking calendars, finding a slot, sending invites, and adding a video link. Lose context midway, and suddenly the week goes by without a meeting.

Real systems are messy. Credentials expire. Permissions vary. APIs return noisy responses. Errors are often vague. An agent tries to update a customer record and gets “Invalid schema.” Did a field change? Is something missing? Humans infer. Machines guess.
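One way to reduce the guessing is to check a tool call against its schema before it ever reaches the real system, and to return a specific message instead of a bare “Invalid schema.” The sketch below is a deliberately small checker for flat objects only; the `UPDATE_CUSTOMER_SCHEMA` fields and the function name are hypothetical, and a production system would more likely rely on a full JSON Schema validator.

```python
# Mapping from JSON Schema type names to Python types (flat fields only).
JSON_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}

# Hypothetical schema for an "update customer" tool.
UPDATE_CUSTOMER_SCHEMA = {
    "type": "object",
    "properties": {
        "customer_id": {"type": "integer"},
        "email": {"type": "string"},
    },
    "required": ["customer_id", "email"],
}


def explain_schema_problems(schema: dict, args: dict) -> list:
    """Return readable problems with args; an empty list means the call looks valid."""
    problems = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required field '{name}'")
    for name, value in args.items():
        if name not in properties:
            problems.append(f"unknown field '{name}'")
            continue
        expected = properties[name].get("type")
        python_type = JSON_TYPES.get(expected)
        if python_type is None:
            continue  # type not covered by this sketch
        # bool is a subclass of int in Python, so reject it for numeric fields.
        bool_as_number = isinstance(value, bool) and expected in ("integer", "number")
        if bool_as_number or not isinstance(value, python_type):
            problems.append(
                f"field '{name}' should be {expected}, got {type(value).__name__}"
            )
    return problems
```

Feeding these messages back to the model gives it something concrete to correct, such as a misspelled field name or a string where a number was expected.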

Failure compounds quickly. Small misunderstandings can spiral into retries, loops, rate limits, or false success. When an AI claims it completed a task that didn’t actually happen, trust evaporates. Not in the model’s intelligence, but in its ability to act reliably.
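Two of these failure modes, runaway retries and false success, can be contained with very little code. The sketch below is one possible approach, not a standard recipe: `call_with_retries` is a hypothetical helper that caps the number of attempts, backs off between them, and only reports success when a caller-supplied `verify` function confirms the result.

```python
import time


def call_with_retries(action, verify, max_attempts=3, base_delay=0.5):
    """Run action() up to max_attempts times; succeed only if verify(result) is true."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            result = action()
        except Exception as exc:  # broad on purpose: any tool failure counts
            last_error = exc
        else:
            if verify(result):
                return {"ok": True, "attempts": attempt, "result": result}
            # The call returned, but the outcome was not what we asked for.
            last_error = RuntimeError("action returned but verification failed")
        if attempt < max_attempts:
            # Exponential backoff to avoid hammering a rate-limited API.
            time.sleep(base_delay * 2 ** (attempt - 1))
    return {"ok": False, "attempts": max_attempts, "error": str(last_error)}
```

The important design choice is that “the call did not raise an error” is never treated as “the task is done.” The agent is told it succeeded only after an independent check, for example re-reading the record it just updated.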

Single-step tool calls usually work. Problems show up when you chain five or ten actions across different systems. That’s where impressive demos turn into operational headaches.

Will these problems be solved?

They have to be. Too much AI potential depends on it. And what the industry is learning is that production-grade tool calling isn’t about smarter models. It’s about better infrastructure:

Clean tool discovery. Agents need to see the right tools for the task, not every tool available.

Smart context management. Tool outputs must be filtered and compressed so irrelevant data doesn’t overwhelm the model or drive up costs.

Governed authentication. Agents need scoped, temporary access that doesn’t break mid-workflow or turn into a security risk.

Observable execution. When something breaks, teams must see exactly what was called, with what inputs, and what happened next.

Runtime guardrails. Some controls must be enforced outside the model to prevent misuse, including the “confused deputy” problem, where an agent is tricked into using its own legitimate permissions on behalf of someone who doesn’t have them.

Without these foundations, AI stays stuck at the demo stage. With them, it becomes something organizations can trust with real work.
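As a rough illustration of how these pieces can sit in one place, here is a minimal sketch of a gateway that every tool call passes through. `Grant` and `Gateway` are hypothetical names, not part of any real product or library. The gateway shows the agent only the tools its grant covers, refuses calls once the grant expires, records every call in a trace, and caps the size of what goes back to the model. Plain truncation is a crude stand-in for real filtering and compression.

```python
import json
import time
from dataclasses import dataclass, field


@dataclass
class Grant:
    """Hypothetical scoped, expiring permission for a single agent run."""
    allowed_tools: set
    expires_at: float  # Unix timestamp

    def permits(self, tool: str, now: float) -> bool:
        return tool in self.allowed_tools and now < self.expires_at


@dataclass
class Gateway:
    tools: dict              # tool name -> callable
    grant: Grant
    max_output_chars: int = 200
    trace: list = field(default_factory=list)

    def visible_tools(self) -> list:
        # Discovery: the agent only sees tools covered by its grant.
        return sorted(n for n in self.tools if n in self.grant.allowed_tools)

    def call(self, name: str, args: dict) -> dict:
        now = time.time()
        record = {"tool": name, "args": args, "at": now}
        self.trace.append(record)  # observability: every attempt is logged
        # Guardrail enforced in code, regardless of what the model was told.
        if name not in self.tools or not self.grant.permits(name, now):
            record["status"] = "denied"
            return {"ok": False, "error": f"tool '{name}' is not permitted"}
        try:
            raw = self.tools[name](**args)
        except Exception as exc:
            record["status"] = "error"
            record["error"] = repr(exc)
            return {"ok": False, "error": str(exc)}
        text = json.dumps(raw)
        record["status"] = "ok"
        record["output_chars"] = len(text)
        # Context management: never hand the model more than the cap.
        return {
            "ok": True,
            "output": text[: self.max_output_chars],
            "truncated": len(text) > self.max_output_chars,
        }
```

Because the permission check lives in the gateway rather than in the prompt, a manipulated model can ask for a forbidden tool but cannot reach it, and the trace shows that it tried.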

What good tool calling unlocks

When tool calling works reliably, the results may not be material for the next Hollywood blockbuster, but they sure are transformative for the workplace:

- Pull the latest performance data from product analytics, update the spreadsheet, and draft a short summary. Done in seconds instead of half an hour.

- Refresh the data, build the slides, and share the updated deck with the team. A weekly reporting ritual that takes half a day becomes a background task.

- Summarize AWS billing changes for the month and flag anything growing faster than expected. All with absolutely zero time spent deciphering complicated dashboards.

Work that completes in the background without constant human babysitting. That’s the real promise of tool calling: removing the invisible friction that fills people’s days. We will spare you the already cliché “so that you can focus on what matters most: strategy!” You know better what to do with that freed Friday afternoon.

Where Nexus comes in

The future of AI isn’t about systems that sound smarter. It’s about systems reliable enough that you stop thinking about how they work at all. Tool calling is the mechanism that makes that future possible.

At Civic, we believe Nexus delivers the best tool calling in the industry. Of course, we may be biased. But after months of working with clients and teams, our goal has remained simple: making sure “it worked great in the demo” is always followed by “it works every day.”

If we’re right, you’ll notice quickly once you try it. And if we’re not, we genuinely want to hear why.